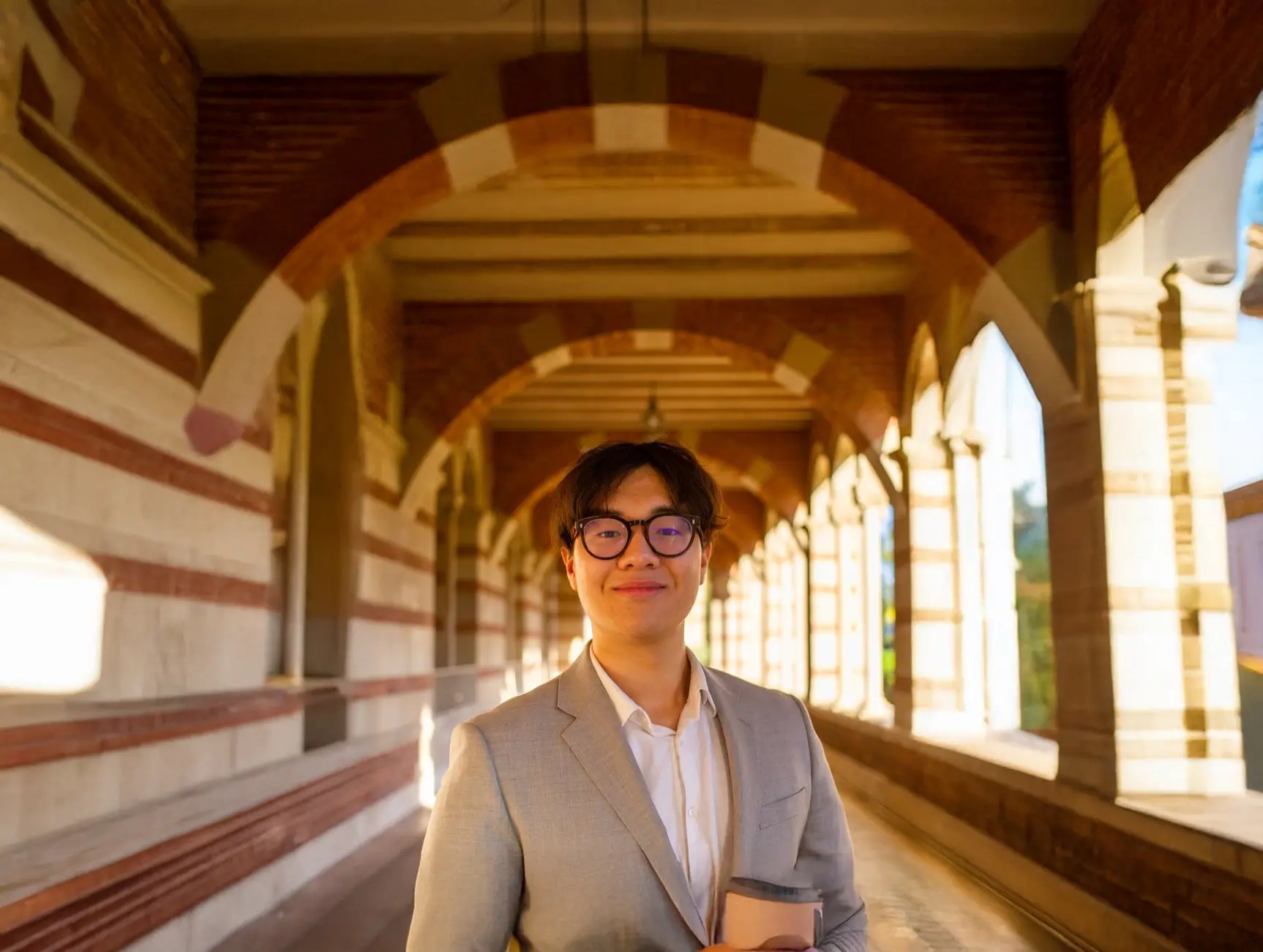

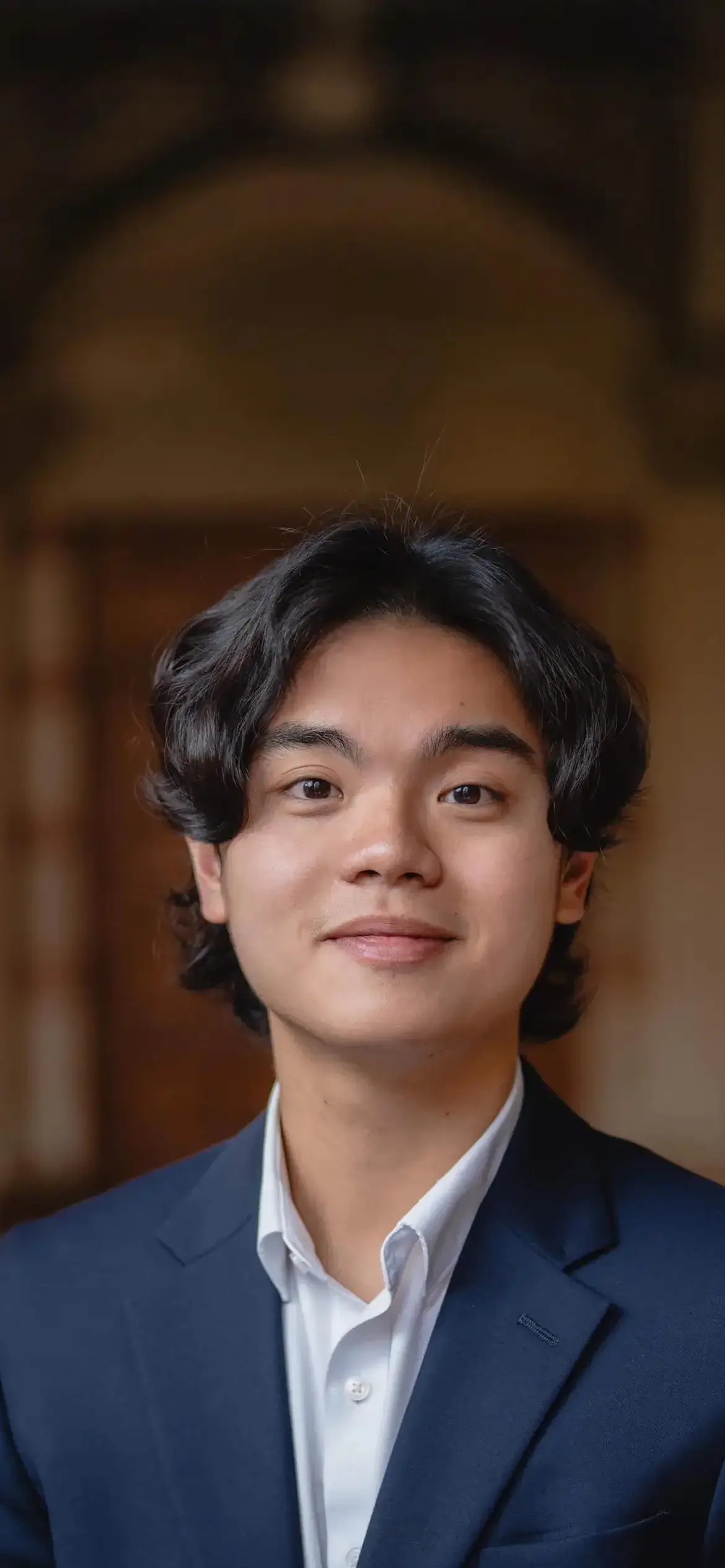

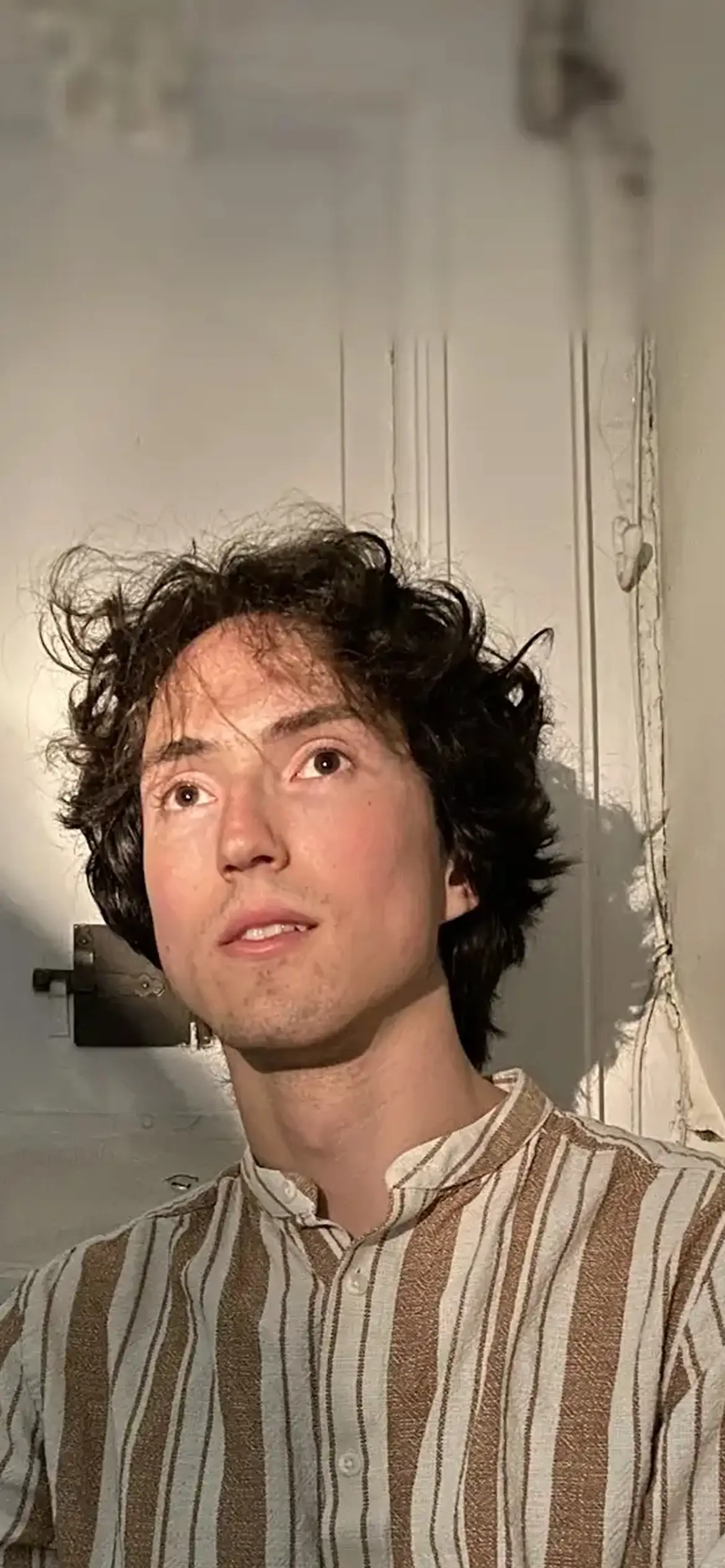

Meet our founders

Trust and safety aren’t just requirements in the legal field; they form the bedrock of a lawyer’s code of ethics. We built Truth Systems to be the infrastructure that lets lawyers uphold those principles across every AI tool they use.

What excites me is that we can design future guidelines for AI use that are both iterative and programmatic, with AI serving as both the subject and the infrastructure of governance. This is a vision in which adoption happens not in spite of safety, but because of it.

My experience building one of the largest legal AI tools at Legora taught me that adoption often falters not because of the software, but because the infrastructure to keep it safe is missing. We are here to make compliance effortless and give innovation free rein.